Mastering Image Creation from Text with Python and AI

Introduction to Text-to-Image Generation

Artificial intelligence has advanced remarkably in converting text into images. This guide walks through text-to-image generation in Python using Stable Diffusion XL, a pre-trained diffusion-based generative model.

Overview of the Model

The stabilityai/stable-diffusion-xl-base-1.0 model generates and modifies images based on text prompts. It is a Latent Diffusion Model that uses two fixed, pre-trained text encoders: OpenCLIP-ViT/G and CLIP-ViT/L.

- Developed by: Stability AI

- Model Type: Diffusion-based text-to-image generative model

Setting Up Your Environment

Before writing any code, make sure your environment is ready. Install the latest versions of the essential libraries: diffusers, transformers, safetensors, accelerate, and invisible_watermark. The code in this guide also imports torch and matplotlib, so install those as well if they are missing. Open your terminal and run the following commands:

pip install diffusers --upgrade

pip install invisible_watermark transformers accelerate safetensors
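After installing, it can help to confirm that the packages are actually importable before loading a multi-gigabyte model. The following is a minimal sketch of such a check. The helper name missing_modules is hypothetical, and the module list assumes that invisible_watermark is imported under the name imwatermark.

```python
from importlib.util import find_spec

# Import names for the packages used in this guide.
# Assumption: invisible_watermark is imported as "imwatermark".
REQUIRED_MODULES = [
    "torch",
    "diffusers",
    "transformers",
    "accelerate",
    "safetensors",
    "imwatermark",
]


def missing_modules(modules):
    """Return the module names that cannot be found in the current environment."""
    return [name for name in modules if find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(REQUIRED_MODULES)
    if missing:
        print("Missing modules:", ", ".join(missing))
    else:
        print("All required modules are importable.")
```

If the script reports a missing module, rerun the matching pip install command in the same Python environment.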

Loading the Model in Python

With the environment set up, it's time to load the stabilityai/stable-diffusion-xl-base-1.0 model into Python. The following code snippet loads the base pipeline onto the GPU and then loads the refiner pipeline, which reuses the base model's second text encoder and VAE to save memory:

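import torch

from diffusers import DiffusionPipeline

# Load the SDXL base pipeline in half precision

pipe = DiffusionPipeline.from_pretrained(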

"stabilityai/stable-diffusion-xl-base-1.0",

torch_dtype=torch.float16,

use_safetensors=True,

variant="fp16"

)

pipe.to("cuda")

refiner = DiffusionPipeline.from_pretrained(

"stabilityai/stable-diffusion-xl-refiner-1.0",

text_encoder_2=pipe.text_encoder_2,

vae=pipe.vae,

torch_dtype=torch.float16,

use_safetensors=True,

variant="fp16",

)

refiner.enable_model_cpu_offload()

Crafting Your Prompt

Next, we need to provide our model with a descriptive input to generate an image. Here’s a sample text prompt:

# Sample text input

prompt = "A vibrant sunset over the city skyline with silhouetted buildings."

The prompt variable contains the descriptive text guiding the model's image creation. It is the creative spark that shapes the visual outcome.

To refine results, consider using a negative prompt to exclude certain elements. For example:

# Negative prompt (optional): list the concepts to steer away from

negative_prompt = "water, river, lake, ocean"

Incorporating a negative prompt grants additional control over the generated image, allowing you to specify elements to omit, thus customizing the output further.
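The negative prompt is encoded like any other text, so instruction words such as "avoid" are treated as content rather than as commands. A common convention is therefore to pass a short, comma-separated list of unwanted concepts. The sketch below shows one way to build such a string; the helper name build_negative_prompt is hypothetical and not part of diffusers.

```python
def build_negative_prompt(unwanted):
    """Join unwanted concepts into a comma-separated negative prompt."""
    # Drop surrounding whitespace and skip empty entries.
    cleaned = [term.strip() for term in unwanted if term.strip()]
    return ", ".join(cleaned)


negative_prompt = build_negative_prompt(["water", "river", " lake ", ""])
print(negative_prompt)  # water, river, lake
```

Keeping the terms in a list makes it easy to add or remove one concept at a time while comparing results.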

Generating Images from Text

Now for the exciting part: generating an image from our input text. The following code snippet creates an image from the prompt:

# Generate an image from the text; .images is a list of PIL images, so [0] selects the first

images = pipe(prompt=prompt, negative_prompt=negative_prompt).images[0]

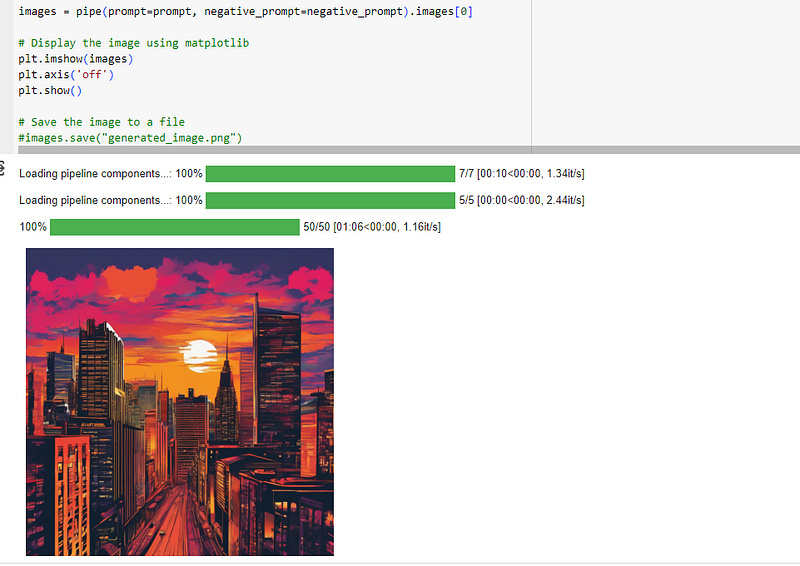
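The refiner loaded earlier is not used by the call above. One way to bring it in, sketched here under assumptions, is to let the base pipeline handle the first part of denoising and hand its latents to the refiner for the rest. The function name generate_with_refiner and the default values (40 steps, a 0.8 split) are illustrative choices, not settings taken from this guide.

```python
def generate_with_refiner(base, refiner, prompt, negative_prompt=None,
                          num_inference_steps=40, high_noise_frac=0.8):
    """Run the base pipeline for the first part of denoising, then the refiner."""
    # The base pipeline stops early and returns latents instead of a decoded image.
    latents = base(
        prompt=prompt,
        negative_prompt=negative_prompt,
        num_inference_steps=num_inference_steps,
        denoising_end=high_noise_frac,
        output_type="latent",
    ).images
    # The refiner picks up at the same point and finishes the remaining steps.
    return refiner(
        prompt=prompt,
        negative_prompt=negative_prompt,
        num_inference_steps=num_inference_steps,
        denoising_start=high_noise_frac,
        image=latents,
    ).images[0]
```

With the objects defined earlier, this would be called as generate_with_refiner(pipe, refiner, prompt, negative_prompt) and returns a single image, just like the base-only call.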

Displaying and Saving Your Creation

Once the image is generated, you can display it using Matplotlib and save it if desired:

import matplotlib.pyplot as plt

# Display the image using matplotlib

plt.imshow(images)

plt.axis('off')

plt.show()

# Save the image to a file

# images.save("generated_image.png")

The command plt.imshow(images) displays the generated image, while uncommenting the last line allows you to save it to a file.

Best Practices for Text-to-Image Generation

As you explore the world of text-to-image generation, consider these tips:

- Experiment with various text inputs for diverse results.

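- Adjust generation parameters such as guidance_scale (how closely the image follows the prompt) and num_inference_steps (how many denoising steps are run) to balance prompt fidelity, variety, and speed.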

- Continuously iterate and refine your text descriptions for optimal outcomes.

- Add concrete details to your prompts, such as subject, style, lighting, and composition, to guide the model more effectively.
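To make the parameter tip above concrete, the sketch below builds a small grid of settings and runs the pipeline once per combination. The helper names parameter_grid and run_grid and the example values are hypothetical; guidance_scale and num_inference_steps are keyword arguments accepted by the pipeline call.

```python
from itertools import product


def parameter_grid(guidance_scales, step_counts):
    """Return one keyword-argument dict per combination of settings."""
    return [
        {"guidance_scale": scale, "num_inference_steps": steps}
        for scale, steps in product(guidance_scales, step_counts)
    ]


def run_grid(pipe, prompt, grid):
    """Call the pipeline once per settings dict and pair each image with its settings."""
    return [(settings, pipe(prompt=prompt, **settings).images[0]) for settings in grid]


grid = parameter_grid([5.0, 7.5], [30, 50])
print(len(grid))  # 4
```

Calling run_grid(pipe, prompt, grid) with the pipeline loaded earlier returns a list of (settings, image) pairs that can be compared side by side. For a fairer comparison, the pipeline call also accepts a generator argument, so a seeded torch.Generator can be used to start each run from the same noise.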

Conclusion

The advent of text-to-image generation models allows us to bridge the gap between words and visuals, seamlessly translating textual descriptions into striking images. This technology not only enriches the field of AI but also inspires creativity in ways previously unimagined.

Having taken your initial steps into text-to-image generation, embrace the creative process of transforming your ideas into visuals. Share your experiences and creations in the comments, as we collectively push the limits of AI's capabilities.

Happy coding!
