Discovering Hugging Face's New Diffusers Library for AI Models

Chapter 1: Introduction to Diffusers

Hugging Face, best known for its transformers library, has released a new library for building and running diffusion models. If you're unfamiliar with diffusion models, they are the class of generative model behind some of the year's most talked-about AI creations.

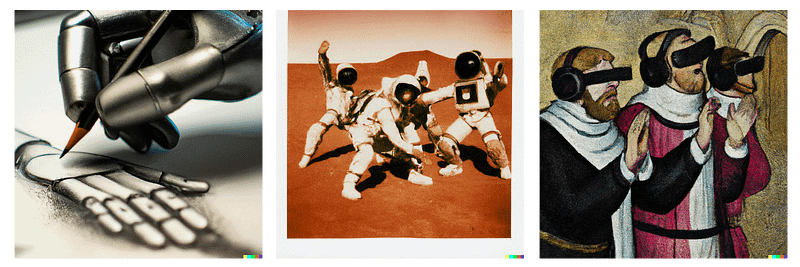

The stunning, artistic images you've encountered online are likely products of platforms like OpenAI's DALL-E 2, Google's Imagen, and Midjourney, all of which utilize diffusion models for their image generation.

Hugging Face now offers an open-source library, diffusers, that lets users download pretrained diffusion models and generate images with just a few lines of code. The library makes these highly intricate models far more approachable. In this article, we'll look at how the library operates, generate some images, and compare our results with those from the leading models mentioned earlier.

If you prefer visual learning, check out this video walkthrough:

Chapter 2: Getting Started with Diffusers

To get started, install the diffusers library using pip and initialize a diffusion model or pipeline. A pipeline typically bundles the preprocessing and encoding steps together with the diffusion process itself. For our example, we'll employ a text-to-image diffusion pipeline.
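As a minimal sketch of that setup: the checkpoint ID below is the one named later in this article, and treating it as the model behind the examples is an assumption. The install line in the comment, including the extra `transformers` and `torch` packages, is likewise an assumption about what this checkpoint needs, and the loader call reflects recent diffusers releases.

```python
# Assumed install step (run in a shell first):
#   pip install diffusers transformers torch

# Checkpoint ID mentioned later in this article; using it here is an assumption.
MODEL_ID = "CompVis/ldm-text2im-large-256"


def load_pipeline(model_id: str = MODEL_ID):
    """Download (on first use) and return a text-to-image pipeline."""
    # Imported lazily so this file can be imported without diffusers installed.
    from diffusers import DiffusionPipeline

    # from_pretrained fetches the weights and config from the Hugging Face Hub
    # and caches them locally for later runs.
    return DiffusionPipeline.from_pretrained(model_id)
```

Calling `load_pipeline()` triggers a sizeable download the first time, so expect the first run to take a while.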

Next, we can create a prompt and run it through our model pipeline. Inspired by Hugging Face's introductory notebooks, we will generate an image of a squirrel munching on a banana.
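The generation step might look roughly like the sketch below. The helper name `generate_images` is hypothetical, the default values are illustrative rather than taken from this article, and the keyword names and the `.images` attribute assume the calling convention of the latent diffusion text-to-image pipeline in recent diffusers releases; other versions may differ.

```python
def generate_images(pipe, prompt, steps=50, guidance_scale=6.0, eta=0.3):
    """Run one prompt through a text-to-image pipeline and return its images.

    `pipe` is any callable that follows the diffusers pipeline calling
    convention (an assumption; check the signature for your version).
    """
    output = pipe(
        prompt,
        num_inference_steps=steps,      # more steps: slower, usually cleaner
        guidance_scale=guidance_scale,  # higher: sticks closer to the prompt
        eta=eta,                        # DDIM noise parameter (0.0 adds no noise per step)
    )
    # Recent releases return an output object whose .images is a list of
    # PIL images; this attribute name is an assumption for older versions.
    return output.images
```

With a loaded pipeline `pipe`, something like `generate_images(pipe, "a squirrel eating a banana")[0].save("squirrel.png")` would write the first result to disk. The prompt wording here is mine, not the exact prompt used for the images in this article.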

The process is remarkably straightforward. Although the resulting image may not rival the creations of DALL-E 2, it showcases the capability of producing images using just five lines of code and at no cost. If that doesn't impress you, I don't know what will!

Here's another rendition of a squirrel enjoying a banana:

Chapter 3: The Art of Prompt Engineering

An intriguing trend has emerged since the introduction of popular diffusion models (DALL-E 2, Imagen, and Midjourney): the art of "prompt engineering." This involves crafting prompts to elicit specific outcomes. For instance, users have discovered that adding phrases like "in 4K" or "rendered in Unity" can enhance the realism of the generated images, even though the models do not actually output 4K resolution.

What happens if we apply similar techniques with our basic diffusion model?
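One way to set up that experiment is to build the prompt variants programmatically and pass each one to the pipeline in turn. The helper `prompt_variants` is a hypothetical sketch, not part of diffusers, and the base prompt wording is illustrative; only the two modifiers come from the text above.

```python
def prompt_variants(base_prompt, modifiers):
    """Return the base prompt followed by one variant per modifier."""
    base = base_prompt.strip()
    variants = [base]  # keep the unmodified prompt as a baseline
    for modifier in modifiers:
        variants.append(f"{base}, {modifier.strip()}")
    return variants


# Modifiers quoted in the text above; the base prompt is illustrative.
prompts = prompt_variants(
    "a squirrel eating a banana",
    ["in 4K", "rendered in Unity"],
)
for p in prompts:
    print(p)
```

Keeping the unmodified prompt in the list gives a baseline image to compare each modifier against.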

Although the generated images may appear quirky, with some odd banana placements, the model does display commendable detail in certain areas, such as the reflection on the banana in the first image.

As we explore further, we note that models like "CompVis/ldm-text2im-large-256" are becoming contenders in the creative space, posing a challenge to traditional photographers and artists.

Chapter 4: Experiments in Rome

Currently residing in Rome, I couldn't resist the temptation to visualize an Italian enjoying pizza atop the iconic Colosseum, despite the summer heat being less than ideal.

While the figure is not literally on top of the Colosseum, the model’s output is commendable. The architectural details look impressive, although there is a slight inconsistency in the sky's color.

Our Italian figure, complete with sunglasses that evoke a 90s dad vibe, is engaging, although the image lacks diversity. Notably, the model did not generate any representations of women or individuals from varied backgrounds.

Understanding biases within this model, as well as future models hosted by Hugging Face, will be crucial as we move forward.

Chapter 5: Abstract Concepts and Challenges

Returning to our squirrel theme, more abstract prompts such as "a giant squirrel destroying a city" produce mixed results.

The model appears to struggle when combining two seemingly unrelated concepts: a giant squirrel and an urban landscape. This difficulty is evident in the two images generated from the same prompt, which depict either a city skyline or an oversized squirrel in a more natural setting.

For comparison, here’s what DALL-E 2 produces from the same prompt:

While all of these outputs are impressive, we must acknowledge that we cannot expect identical performance levels between these different models, at least not yet.

Chapter 6: Conclusion and Future Directions

This marks our initial exploration of Hugging Face's new library. I am genuinely excited to see how this library evolves. Currently, the most advanced diffusion models are often proprietary, and this open-source framework could be a key to unlocking new realms of AI-driven creativity.

While this library may not yet rival DALL-E 2, Imagen, or Midjourney, its existence enriches the landscape by giving users a choice between commercial and open-source options.

These open-source models empower everyday users to access the latest advancements in deep learning. When a broad audience experiments with innovative technology, remarkable outcomes are often the result.

I look forward to seeing where this journey leads. For more insights, feel free to join me on YouTube or engage with the vibrant ML community on Discord.

Thank you for reading!

References

[1] DALL-E Instagram

[2] Demographics of Italy (2019), UN World Population Prospects

Hugging Face Diffusers on GitHub