The Future of Technology: Deep Learning with Air Bubbles


Chapter 1: A Glimpse into the Future

As I approach my front door, fatigue washes over me. Today was a poignant farewell to Elon Musk, full of both joy and sorrow. Many attendees indulged a little too freely in his honor, and I'm only now beginning to regain my clarity.

Elon Musk (1971–2070) Inventor, Entrepreneur, Visionary

After a dizzying hyperloop ride, my surroundings feel surreal. I struggle to recall my entry code and hope I'm at the right door. Thankfully, the door has an unobtrusive panel of translucent glass that recognizes me without a password or electricity. It needs only my face, and it knows me as readily as my mother would.

This remarkable glass panel is capable of deep learning. On its reverse side are five facial images, including mine; my image must light up to signal my arrival. Shortly, the panel will unlock the door and activate the climate control system.

Section 1.1: Understanding Smart Glass Technology

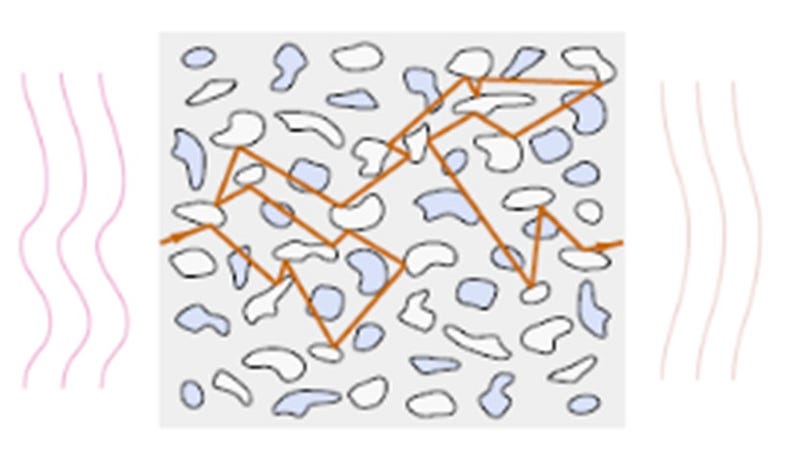

This isn't your typical glass. It's a smart glass engineered for deep learning. Within its one-millimeter thickness, air bubbles are strategically positioned to replicate a neural network. Unlike the layered neural networks from 2019, these bubbles are arranged in a seemingly random pattern.

When light passes through the glass, it interacts with the bubbles, scattering in various directions. A larger bubble redirects it downward, while a tiny bubble refocuses it back toward the front.

The fundamental concept of this optical medium has not changed since it was first proposed fifty years ago, in a 2019 Photonics Research article by researchers at the University of Wisconsin–Madison and MIT. Their groundbreaking work laid the foundation for smart glass with embedded AI.

Air bubbles act as linear scatterers: the light leaving them is a linear function of the light entering them. Deep learning, however, requires nonlinear activations to work. In conventional neural networks, these come from functions such as ReLU, which act like gates that let a signal through only when it exceeds a threshold; softmax then turns the final outputs into probabilities.
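To see why the nonlinearity matters, here is a minimal sketch with arbitrary illustrative matrices standing in for the scattering between bubbles (an assumption; this is generic neural-network arithmetic, not a model of real bubbles). Two linear layers in a row collapse into a single linear layer, and only a nonlinearity such as ReLU placed between them prevents that collapse.

```python
# Minimal sketch: a stack of purely linear layers is still just one linear layer.
# The matrices are arbitrary illustrative numbers, not a model of real bubbles.

def matvec(m, v):
    """Multiply matrix m (a list of rows) by vector v."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]

def matmul(a, b):
    """Multiply matrix a by matrix b."""
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]

def relu(v):
    """Rectified linear unit: keep positive values, block negative ones."""
    return [max(0.0, x) for x in v]

layer1 = [[1.0, -2.0], [0.5, 1.0]]
layer2 = [[2.0, 1.0], [-1.0, 3.0]]
x = [1.0, 1.0]

# Two linear layers applied in sequence...
two_layers = matvec(layer2, matvec(layer1, x))
# ...give the same result as one layer whose matrix is their product.
one_layer = matvec(matmul(layer2, layer1), x)
print(two_layers, one_layer)

# A ReLU between the layers breaks that equivalence.
with_relu = matvec(layer2, relu(matvec(layer1, x)))
print(with_relu)
```

However many linear layers are stacked, the product of their matrices is a single matrix, so depth adds no expressive power without a nonlinear step.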

To add nonlinearity, the glass also contains nonlinear materials such as optical saturable absorbers and photonic crystals. When a light ray reaches one of these regions after passing several air bubbles, it continues only if its intensity exceeds a set threshold; otherwise, it is absorbed and its journey ends.
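The intensity threshold can be sketched with the textbook saturable-absorber model, in which the absorption coefficient falls as the light gets brighter. This is a minimal illustration under stated assumptions: the model alpha(I) = alpha0 / (1 + I / I_sat), a thin layer whose transmission is exp(-alpha(I) * thickness), and the hypothetical parameter values `ALPHA0`, `I_SAT`, and `THICKNESS`.

```python
import math

# Minimal sketch of a saturable absorber acting as a soft intensity threshold.
# Assumptions (illustrative only): absorption follows
# alpha(I) = alpha0 / (1 + I / I_sat), and the layer is thin enough that the
# intensity barely changes inside it, so transmission = exp(-alpha(I) * thickness).
ALPHA0 = 5.0      # small-signal absorption per unit length (hypothetical)
I_SAT = 1.0       # saturation intensity, arbitrary units (hypothetical)
THICKNESS = 1.0   # layer thickness in the same length unit (hypothetical)

def transmission(intensity):
    """Fraction of incoming light that passes through the absorbing region."""
    alpha = ALPHA0 / (1.0 + intensity / I_SAT)
    return math.exp(-alpha * THICKNESS)

# Weak light is almost entirely absorbed; strong light saturates the absorber
# and passes through.
for intensity in (0.01, 1.0, 100.0):
    out = intensity * transmission(intensity)
    print(f"in={intensity:>7.2f}  T={transmission(intensity):.3f}  out={out:.4f}")
```

Unlike an ideal ReLU, this is a smooth threshold: transmission rises gradually with intensity rather than switching on at a single value, which is still enough to make the overall medium nonlinear.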

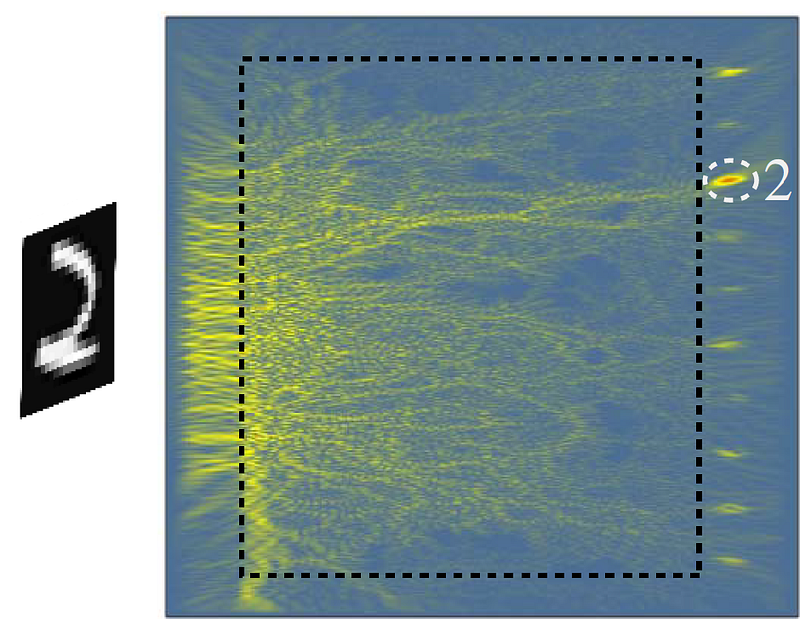

In 2019, the researchers demonstrated that their glass could recognize handwritten digits, a task still relevant today with the enduring use of the MNIST dataset!

For instance, when an image of the digit '2' is projected onto the glass, the air bubbles steer the light until it concentrates at the output location assigned to that digit.
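Reading out the answer then amounts to finding the brightest output spot. The sketch below assumes ten output spots, one per digit, and uses made-up intensity values; it is an illustration of the readout idea, not the researchers' actual measurement pipeline.

```python
# Minimal sketch of reading the glass's answer, assuming ten output spots
# (one per digit) and treating the brightest spot as the prediction.
# The intensity values are made-up numbers for illustration.
intensities = [0.02, 0.05, 0.61, 0.04, 0.03, 0.06, 0.02, 0.09, 0.05, 0.03]

# The predicted digit is the index of the brightest spot.
predicted_digit = max(range(10), key=lambda d: intensities[d])

# Normalizing gives the fraction of output light landing on each spot,
# which serves as a rough confidence score.
total = sum(intensities)
fractions = [i / total for i in intensities]

print(predicted_digit, round(fractions[predicted_digit], 2))
```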

The team could hardly have envisioned the manufacturing precision achieved today, allowing for the placement of bubbles with nanometer accuracy.

It's time to step away from the glass and go inside; the evening air is thin and disorienting. Yet I can't help thinking about the cross-entropy loss used to train this material. Remarkably, it is the same loss used by any digit-recognition algorithm; the only difference is that gradient descent now optimizes the medium's dielectric constant rather than the weights of digital neurons.
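The training loop behind that loss can be sketched in a few lines. In this minimal example, a plain weight matrix `W` stands in for the tunable material parameters (an assumption for illustration; it does not simulate light in the glass), and the toy dataset and learning rate are hypothetical. It uses the standard fact that, for softmax followed by cross-entropy, the gradient with respect to each class score is the predicted probability minus the one-hot target.

```python
import math

# Minimal sketch: softmax cross-entropy minimized by gradient descent.
# Assumption: a plain weight matrix W stands in for the tunable material
# parameters (in the story, the dielectric constant at each point of the
# glass). This is not a physical simulation of the medium.

def softmax(z):
    """Turn raw scores into probabilities (shifted by the max for stability)."""
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    s = sum(exps)
    return [e / s for e in exps]

# Hypothetical toy data: two input features, two classes.
data = [([1.0, 0.0], 0), ([0.9, 0.2], 0), ([0.0, 1.0], 1), ([0.1, 0.8], 1)]
W = [[0.0, 0.0], [0.0, 0.0]]  # W[class][feature]; all parameters start at zero
LEARNING_RATE = 0.5

def scores(x):
    """Linear score for each class."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def mean_loss():
    """Average cross-entropy: -log(probability assigned to the true class)."""
    return sum(-math.log(softmax(scores(x))[y]) for x, y in data) / len(data)

start_loss = mean_loss()
for _ in range(100):
    grad = [[0.0, 0.0], [0.0, 0.0]]
    for x, y in data:
        p = softmax(scores(x))
        for c in range(2):
            # For softmax + cross-entropy, d(loss)/d(score_c) = p_c - [c == y].
            err = p[c] - (1.0 if c == y else 0.0)
            for f in range(2):
                grad[c][f] += err * x[f] / len(data)
    # Gradient descent step: move every parameter against its gradient.
    for c in range(2):
        for f in range(2):
            W[c][f] -= LEARNING_RATE * grad[c][f]
end_loss = mean_loss()

print(f"mean cross-entropy: {start_loss:.3f} -> {end_loss:.3f}")
```

In the physical version, computing the gradient with respect to the material parameters requires differentiating through the optics, which a simple weight matrix sidesteps; the loss and the descent step keep the same form.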

It's astonishing that in just fifty years, these analog neural networks have become ubiquitous, functioning without power and operating at the speed of light. Their computation is inherent to their structure, akin to an organism possessing a specialized organ for deep learning.

I should really head inside now, but I hesitate, waiting for the unusual moment when my shadow splits in two as Deimos rises in the sky. In the distance, I can see ten rocket boosters landing in perfect unison. Musk himself may be gone, but his impact remains.

I finally push the door open and step inside.

Chapter 2: The Unfolding of Machine Learning

The first video, "How To Build A Machine Learning Model With No-Code," walks viewers through building machine learning models without any programming, showing how accessible the technology has become.

The second video, "Machine Learning Everywhere feat. Pete Warden | Stanford MLSys Seminar Episode 31," explores how pervasive machine learning has become in modern applications, with insights from an expert in the field.